Not exactly self hosting but maintaining/backing it up is hard for me. So many “what if”s are coming to my mind. Like what if DB gets corrupted? What if the device breaks? If on cloud provider, what if they decide to remove the server?

I need a local server and a remote one that are synced to confidentially self-host things and setting this up is a hassle I don’t want to take.

So my question is how safe is your setup? Are you still enthusiastic with it?

Right now I just play with things at a level that I don’t care if they pop out of existence tomorrow.

If you want to be truly safe (at an individual level, not an institutional level where there’s someone with an interest in fucking your stuff up), you need to make sure things are recoverable unless 3 completely separate things go wrong at the same time (an outage at a remote data centre, your server fails and your local backup fails). Very unlikely for all 3 to happen simultaneously, but 1 is likely to fail and 2 is foreseeable, so you can fix it before the 3rd also fails.
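To put rough numbers on that reasoning, here is a minimal sketch. The yearly failure chances are made-up placeholders, not measurements, and the failures are assumed to be independent (a house fire that takes out both the server and the local backup would break that assumption).

```python
from math import prod

def all_fail(probabilities):
    """Chance that every copy is lost at once, assuming independent failures."""
    return prod(probabilities)

def at_least_one_fails(probabilities):
    """Chance that at least one copy fails, assuming independent failures."""
    return 1 - prod(1 - p for p in probabilities)

# Hypothetical per-year failure chances, for illustration only.
copies = {"remote data centre": 0.01, "server": 0.05, "local backup": 0.05}

print(f"at least one fails: {at_least_one_fails(copies.values()):.3f}")
print(f"all three fail:     {all_fail(copies.values()):.6f}")
```

With numbers in this range, a single failure is something to plan for, while losing all three at once is orders of magnitude rarer, as long as each failure gets fixed before the next one arrives.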

Exactly right there with the not worrying. Getting started can be brutal. I always recommend people start without worrying about it, be okay with the idea that you’re going to lose everything.

When you start really understanding how the tech works, then start playing with backups and how to recover. By that time you’ve probably set up enough that you are ready for a solution that doesn’t require setting everything up again. When you’re starting though? Getting it up and running is enough

Gonna just stream of consciousness some stuff here:

Been thinking lately, especially as I have been self-hosting more, how much work is just managing data on disk.

Which disk? Where does it live? How does the data transit from here to there? Why isn’t the data moving properly?

I am not sure what this means, but it makes me feel like we are missing some important ideas around data management at personal scale.

I just got a mini PC and put 5 disks in it and am struggling with the same…

Absurdly safe.

Proxmox cluster, HA active. Ceph for live data. Truenas for long term/slow data.

About 600 pounds of batteries at the bottom of the rack to weather short power outages (up to 5 hours). 2 dedicated breakers on different phases of power.

Dual/stacked switches with LACP’d connections that must be on both switches (one switch dies? Who cares). Dual firewalls with CARP ACTIVE/ACTIVE connection…

Basically everything is as redundant as it can be aside from one power source into the house… and one internet connection into the house. My “single points of failure” are all outside of my hands… and are all mitigated/risk assessed down.

I do not use cloud anything… to put even 1/10th of my shit onto the cloud it’s thousands a month.

It’s quite robust, but it looks like everything will be destroyed when your server room burns down :)

Fire extinguisher is in the garage… literal feet from the server. But that specific problem is actually being addressed soon. My dad is setting up his cluster and I fronted him about 1/2 the capacity I have. I intend to sync longterm/slow storage to his box (the truenas box is the proxmox backup server target, so also collects the backups and puts a copy offsite).

Slow process… Working on it :) Still have to maintain my normal job after all.

Edit: another possible mitigation I’ve seriously thought about for “fire” are things like these…

https://hsewatch.com/automatic-fire-extinguisher/

Or those types of modules that some 3d printer people use to automatically handle fires…

Yeah I really like the “parent backup” strategy from @hperrin@lemmy.world :) This way it costs much less.

The real fun is going to be when he’s finally up and running… I have ~250TB of data on the Truenas box. Initial sync is going to take a hot week… or 2…

Edit: 23 days at his max download speed :(

Fine… a hot month and a half.

I’m doing something similar (with a lot less data), and I’m intending on syncing locally the first time to avoid this exact scenario.
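For anyone sizing their own initial sync, the arithmetic is easy to sketch. The 1 Gbps link speed below is an assumption (the thread only says "max download speed"), but it lands close to the 23-day figure for ~250TB. The function ignores protocol overhead and uses decimal terabytes.

```python
def transfer_days(size_tb: float, link_mbps: float) -> float:
    """Days needed to move size_tb terabytes over a link of link_mbps megabits/s.

    Uses decimal units (1 TB = 10**12 bytes) and ignores protocol overhead.
    """
    bits = size_tb * 1e12 * 8
    seconds = bits / (link_mbps * 1e6)
    return seconds / 86_400

# Assumption: a 1 Gbps downlink on the receiving end.
print(f"{transfer_days(250, 1000):.1f} days")
```

Running the same numbers for a local copy over a faster LAN link shows why seeding the remote box locally first is attractive.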

Different phases of power? Did you have 3-phase run to your house or something?

You could get a Starlink for redundant internet connection. Load balancing / fail over is an interesting challenge if you like to DIY.

In the US at least, most equipment (unless you get into high-end datacenter stuff) runs on 120V. We also use 240V power, but a 240V connection is actually two 120V legs 180 degrees out of phase (strictly speaking it’s split-phase, not two separate phases). The main feed coming into your home is 240V, so your breaker panel splits the circuits evenly between the two legs. Running dual-phase power to a server rack is as simple as just running two 120V circuits from the panel, one on each leg.

My rack only receives a single 120V circuit, but it’s backed up by a dual-conversion UPS and a generator on a transfer switch. That was enough for me. For redundancy, though, dual phases, each with its own UPS, and dual-PSU servers are hard to beat.

Exactly this. 2 phase into house, batteries on each leg. While it would be exceedingly rare for just one phase to go out… I can in theory weather that storm indefinitely.

Nope 240. I have 2x 120v legs.

I actually had Verizon home internet (5G LTE) to do that… but I need static addresses for some services. I’m still working that out a bit…

Couldn’t you use a VPS as the public entry point?

I could… But it would be a royal pain in the ass to find a VPS that has a clean address to use (especially for email operations).

You should edit your post to make this sound simple.

“just a casual self hoster with no single point of failure”

Nah, that’d be mean. It isn’t “simple” by any stretch. It’s an aggregation of a lot of hours put into it. What’s fun is that when it gets that big you start putting tools together to do a lot of the work/diagnosing for you. A good chunk of those tools have made it into production for my companies too.

LibreNMS to tell me what died when… Wazuh to monitor most of the security aspects of it all. I have a gitea instance with my own repos for scripts when it comes maintenance time. Centralized stuff and a cron stub on the containers/vms can mean you update all your stuff in one go

Absurdly safe.

[…] Ceph

For me these two things are exclusive of each other. I had nothing but trouble with Ceph.

Ceph has been FANTASTIC for me. I’ve done the dumbest shit to try and break it and have had great success recovering every time.

The key in my experience is OODLES of bandwidth. It LOVES fat pipes. In my case 2x 40Gbps link on all 5 servers.

It depends on how you set it up. Most people do it wrong.

What does your internal network look like for ceph?

40 SSDs as my OSDs… 5 hosts… all nodes are all functions (monitor/manager/metadata servers), if I added more servers I would not add any more of those… (which I do have 3 more servers for “parts”/spares… but could turn them on too if I really wanted to).

2x 40gbps networking for each server.

Since upstream internet is only 8gbps I let some vms use that bandwidth too… but that doesn’t eat into enough to starve Ceph at all. There’s 2x1gbps for all the normal internet facing services (which also acts as an innate rate limiter for those services).

My incredible hatred and rage for not understanding things powers me on the cycle of trying and failing hundreds of times till I figure it out. Then I screw it all up somehow and the cycle begins again.

All of your issues can be solved by a backup. My host went out of business. I set up a new server, pulled my backups, and was up and running in less than an hour.

I’d recommend docker compose. Each service gets its own folder inside your docker folder. All volumes are a folder in the services folder. Each night, run a script that stops all of them, starts duplicati, backs up to a remote server or webdav share or whatever, and then starts them back up again. If you want to be extra safe, back up to two locations. It’s not that complicated if it’s just your own services.
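A minimal sketch of that nightly routine, under some loud assumptions: every service folder holds a compose file, Python's `tarfile` stands in for Duplicati (so this is not how Duplicati itself is driven), and uploading the archive to a remote server or WebDAV share is left out. The function names and the folder layout are hypothetical.

```python
import subprocess
import tarfile
from datetime import date
from pathlib import Path

COMPOSE_NAMES = ("compose.yaml", "compose.yml",
                 "docker-compose.yaml", "docker-compose.yml")

def find_services(docker_root: Path) -> list[Path]:
    """Return service folders under docker_root that contain a compose file."""
    return sorted(
        d for d in docker_root.iterdir()
        if d.is_dir() and any((d / n).is_file() for n in COMPOSE_NAMES)
    )

def compose(service: Path, *args: str, run=subprocess.run) -> None:
    """Run `docker compose <args>` inside one service folder."""
    run(["docker", "compose", *args], cwd=service, check=True)

def nightly_backup(docker_root: Path, dest: Path, run=subprocess.run) -> Path:
    """Stop every service, archive the whole docker folder, start them again."""
    services = find_services(docker_root)
    archive = dest / f"docker-{date.today().isoformat()}.tar.gz"
    for service in services:
        compose(service, "stop", run=run)
    try:
        # dest must live outside docker_root, or the archive would include itself.
        with tarfile.open(archive, "w:gz") as tar:
            tar.add(docker_root, arcname=docker_root.name)
    finally:
        # Bring services back up even if the archive step failed.
        for service in services:
            compose(service, "start", run=run)
    return archive
```

Something like `nightly_backup(Path("/srv/docker"), Path("/mnt/backup"))` from a cron job would cover the local half (both paths are placeholders); copying the resulting archive to one or two remote locations is the part that makes it a real backup.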

I have a rack in my garage.

My advice, keep it simple, keep it virtual.

I dumpster dove for hardware and run Proxmox on hosts. Not even clustered, just simple standalone Proxmox hosts. Connect to my Synology storage device and done.

I run Nextcloud for WebDAV contacts and calendar (fuck Google), and it does photo and doc storage. The Nextcloud client is free from F-Droid for Android and works on Debian desktops like a charm.

I run Minecraft server

I run home automation server

I run a media server.

Proxmox backs everything up on schedule

All I need to do is get off-site backup setup for Synology important data and I’m all set.

It’s really not as hard as you think if you keep it simple

Others have said this, but it’s always a work in progress.

What started out as just a spare OptiPlex desktop and a need for a dedicated box for Minecraft and Valheim servers has turned into a rack in my living room with a few key things I and others rely on. You definitely aren’t alone XD

Regular, proactive work goes a long way. I also started creating tickets for myself, each with a specific task. This way I could break things down, have reminders of what still needs attention, and track progress.

Do you host your ticketing system? I’d like to try one out. My TODO markings in my notes app don’t end up organized enough to be helpful. My experience is with JIRA, which I despise with every fiber of my being.

I have set up forgejo, which is a fork of gitea. It’s a git forge, but its ticketing system is quite good.

Oh neat, I was actually planning to set that up to store scripts and some projects I’m working on, I’ll give the tickets a try then.

Try Vikunja, it might tick the box for you.

We built Vikunja with speed in mind - every interaction takes less than 100ms.

Their heads are certainly in the right place. I’ll check this out, thank you!

Mostly I just use Nextcloud’s Deck extension. It behaves close enough to what I need as a solo operation.

My professional experience is in systems administration, cloud architecture, and automation, with considerations for corporate disaster recovery and regular 3rd party audits.

The short answer to all of your questions boils down to two things:

1: If you’re going to maintain a system, write a script to build it, then use the script (I’ll expand this below).

2: Expect a catastrophic failure. Total loss, server gone. As such; backup all unique or user-generated data regularly, and practice restoring it.

Okay back to #1; I prefer shell scripts (pick your favorite shell, doesn’t matter which), because there are basically zero requirements. Your system will have your preferred shell installed within minutes of existing, there is no possibility that it won’t. But why shell? Because then you don’t need docker, or python, or a specific version of a specific module/plugin/library/etc.

So okay, we’re gonna write a script. “I should install by hand as I’m taking down notes” right? Hell, “I can write the script as I’m manually installing”, “why can’t that be my notes?”. All totally valid, I do that too. But don’t use the manually installed one and call it done. Set the server on fire, make a new one, run the script. If everything works, you didn’t forget that “oh right, this thing real quick” requirement. You know your script will bring you from blank OS to working server.

Once you have those, the worst case scenario is “shit, it’s gone… build new server, run script, restore backup”. The penalty for critical loss of infrastructure is some downtime. If you want to avoid that, see if you can install the app on two servers, the DB on another two (with replication), and set up a cluster. Worst case (say the whole region is deleted) is the same; make new server, run script, restore backups.

If you really want to get into docker or etc after that, there’s no blocker. You know how to build the system “bare metal”, all that’s left is describing it to docker. Or cloudformation, terraform, etc, etc, etc. I highly recommend doing it with shell first, because A: You learn a lot about the system and B: you’re ready to troubleshoot it (if you want to figure out why it failed and try to mitigate it before it happens again, rather than just hitting “reset” every time).

I just started my mbin instance a week or two ago. When I did, I wrote a guided install script (it’s a long story, but I ended up having to blow away the server like 7 times and re-install).

This might be overkill for your purposes, but it’s the kind of thing I have in mind.

Note1: Sorry, it’s kinda sloppy. I need to clean it up before I submit a PR to the mbin devs for possible inclusion in their documentation. Note2: It assumes that you’re running a single-user instance, and on a single, small server, with no external requirements.

Not exactly self hosting but maintaining/backing it up is hard for me. So many “what if”s are coming to my mind. Like what if DB gets corrupted? What if the device breaks? If on cloud provider, what if they decide to remove the server?

Backups. If you follow the 3-2-1 backup strategy, you don’t have to worry about anything.

Isn’t it enough to have a single offsite backup?

Yeah, that’s exactly what the 3-2-1 rule says.

- 3 copies of your data

- 2 different kinds of storage media

- 1 off-site backup
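The three bullets above can be written down as a tiny check, which also shows why one off-site backup on its own (live data plus a single remote copy) falls a copy short. This is only a sketch: the `Copy` type and the example setup are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Copy:
    name: str
    media: str      # e.g. "ssd", "hdd", "cloud"
    offsite: bool

def satisfies_3_2_1(copies: list[Copy]) -> bool:
    """True if there are >= 3 copies, on >= 2 media types, with >= 1 off-site."""
    return (
        len(copies) >= 3
        and len({c.media for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )

# Hypothetical setup: live data, a local USB disk, and one cloud bucket.
setup = [
    Copy("server", "ssd", offsite=False),
    Copy("usb disk", "hdd", offsite=False),
    Copy("cloud bucket", "cloud", offsite=True),
]
print(satisfies_3_2_1(setup))
```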

Don’t overthink it. Start small, with a home server. Then add stuff, and you will see that it’s not that crazy.

I personally have just one home server that locally creates encrypted backups and uploads them to backblaze.

This gives me the privacy I need as everything is on my server that I own while also having the backups on a big reliable company.

It’s not perfect but it fits my threat model

¯\_(ツ)_/¯ Yeah. It is kinda hard.

Backups. First and foremost.

Now once that is sorted, what if your DB gets corrupted? You test your backups.

Learn how to verify and restore

It is a hassle. That’s why there is a constant back and forth between on prem and cloud in the enterprise

Nothing proves a backup like forcing yourself to simulate a recovery! I like to make one setting change, then make a backup, and then delete everything and try to rebuild it from scratch to see if I can do it and prove the setting change is still there
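That kind of drill can be partly automated. A minimal sketch, assuming the backup is a plain tar archive (real backup tools have their own restore and verify commands): restore into a scratch directory and compare SHA-256 checksums against the live data. All function names here are hypothetical.

```python
import hashlib
import tarfile
import tempfile
from pathlib import Path

def checksums(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*")) if p.is_file()
    }

def backup(source: Path, archive: Path) -> None:
    """Write source into a gzip-compressed tar archive."""
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(source, arcname=source.name)

def restore_matches(source: Path, archive: Path) -> bool:
    """Restore the archive into a scratch directory and compare it with source."""
    with tempfile.TemporaryDirectory() as scratch:
        with tarfile.open(archive) as tar:
            tar.extractall(scratch)
        return checksums(Path(scratch) / source.name) == checksums(source)
```

Make the one setting change, call `backup(...)`, then `restore_matches(...)` should return `True`; change the setting again without a new backup and it should return `False`. Reading whole files into memory is fine for a sketch but would need chunking for large data.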

We do a quarterly test.

I have the DB guy make a change, I nuke it and ensure I can restore it.

For us, Veeam is pretty good. I don’t work for Veeam, and I don’t like their licensing.

Hth

I think “hard” is the wrong word. After all, it’s just a matter of mashing the right combination of buttons on your keyboard. It’s complicated…

I got tired of having to learn new things. The latest was a reverse proxy that I didn’t want to configure and maintain. I decided that life is short and just use samba to serve media as files. One lighttpd server for my favourite movies so I can watch them from anywhere. The rest I moved to free online services or apps that sync across mobile and desktop.

Caddy took an afternoon to figure out and set up, and it does your certs for you.

Unfortunately, I feel the same. As I observed from the commenters here, self-hosting that won’t break seems very expensive and laborious.

Reverse proxy is actually super easy with nginx. I have an nginx server at the front of my server doing the reverse proxy and an Apache server hosting some of those applications being proxied.

Basically 3 main steps:

-

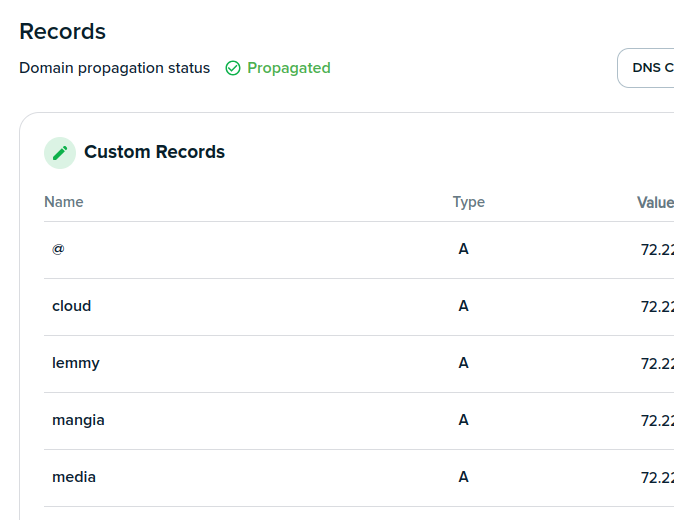

Setup up the DNS with your hoster for each subdomain.

-

Setup your router to port forward for each port.

-

Setup nginx to do the proxy from each subdomain to each port.

DreamHost let’s me manage all the records I want. I point them to the same IP as my server:

This is my config file:

```nginx
server {
    listen 80;
    listen [::]:80;

    server_name photos.my_website_domain.net;

    location / {
        proxy_pass http://127.0.0.1:2342;
        include proxy_params;
    }
}

server {
    listen 80;
    listen [::]:80;

    server_name media.my_website_domain.net;

    location / {
        proxy_pass http://127.0.0.1:8096;
        include proxy_params;
    }
}
```

And then I have Docker containers running on those ports.

```
root@website:~$ sudo docker ps
CONTAINER ID   IMAGE                          COMMAND                  CREATED       STATUS       PORTS                                                        NAMES
e18157d11eda   photoprism/photoprism:latest   "/scripts/entrypoint…"   4 weeks ago   Up 4 weeks   0.0.0.0:2342->2342/tcp, :::2342->2342/tcp, 2442-2443/tcp   photoprism-photoprism-1
b44e8a6fbc01   mariadb:11                     "docker-entrypoint.s…"   4 weeks ago   Up 4 weeks   3306/tcp                                                     photoprism-mariadb-1
```

So if you go to photos.my_website_domain.net, DNS sends you to the same IP as my_website_domain.net. My nginx server will kick in, see from the hostname that you want the ‘photos’ subdomain, and proxy the request to http://127.0.0.1:2342 on the server, which is my PhotoPrism server (the same thing you would reach at http://my_website_domain.net:2342). So you could do http://my_website_domain.net:2342 or http://photos.my_website_domain.net. Either one works. The reverse proxy does the shortcut.

Hope that helps!
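Once there are more than a couple of services, writing each server block by hand gets repetitive. Here is a small sketch that renders the same kind of block from a subdomain-to-port mapping. The domain and ports are the ones from the config in the comment above; the function name and template are hypothetical, and the output still has to be saved into nginx's config directory and reloaded by hand.

```python
SERVER_BLOCK = """\
server {{
    listen 80;
    listen [::]:80;
    server_name {subdomain}.{domain};

    location / {{
        proxy_pass http://127.0.0.1:{port};
        include proxy_params;
    }}
}}
"""

def render_config(domain: str, services: dict[str, int]) -> str:
    """Render one nginx server block per subdomain -> local port mapping."""
    return "\n".join(
        SERVER_BLOCK.format(subdomain=name, domain=domain, port=port)
        for name, port in services.items()
    )

# Names and ports taken from the config in the comment above.
print(render_config("my_website_domain.net", {"photos": 2342, "media": 8096}))
```

The mapping makes the routing rule explicit: nginx picks the block whose `server_name` matches the requested hostname, then forwards to that block's local port.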

fuck nginx and fuck its configuration file with an aids ridden spoon, it’s everything but easy if you want anything other than the default config for the app you want to serve

I only use it for reverse proxies. I still find Apache easier for web serving, but terrible for setting up reverse proxies. So I use the advantages of each one.

🤷♂️ I could spend that two hours with my kids.

You aren’t wrong, but as a community I think we should be listening carefully to the pain points and thinking about how we could make them better.

I had a pretty decent self-hosted setup that was working locally. The whole project failed because I couldn’t set up a reverse proxy with nginx.

I am no pro, very far from it, but I am also somewhat Ok with linux and technical research. I just couldn’t get nginx and reverse proxies working and it wasn’t clear where to ask for help.

I updated my comment above with some more details now that I’m not on lunch.

I started as more “homelab” than “selfhosted” at first - so I was just stuffing around playing with things, but then that seemed sort of pointless and I wanted to run real workloads, then I discovered that was super useful and I loved extracting myself from commercial cloud services (dropbox etc). The point of this story is that I sort of built most of the infrastructure before I was running services that I (or family) depended on - which is where it can become a source of stress rather than fun, which is what I’m guessing you’re finding yourself in.

There’s no real way around this (the pressure you’re feeling), if you are running real services it is going to take some sysadmin work to get to the point where you feel relaxed that you can quickly deal with any problems. There’s lots of good advice elsewhere in this thread about bits and pieces to do this - the exact methods are going to vary according to your needs. Here’s mine (which is not perfect!).

- I’m running on a single mini PC & a Synology NAS setup for RAID 5

- I’ve got a nearly identical spare mini PC, and swap over to it for a couple of weeks (originally every month, but stretched out when I’m busy). That tests my ability to recover from that hardware failure.

- All my local workloads are in LXC containers or VM’s on Proxmox with automated snapshots that are my (bulky) backups, but allow for restoration in minutes if needed.

- The NAS is backed up locally to an external USB that’s not usually plugged in, and to a lower-specced similar setup 300km away.

- All the workloads are dockerised, and I have a standard directory structure and compose approach so if I need to upgrade something or do some other maintenance of something I don’t often touch, I know where everything is without looking back to the playbook

- I don’t use a script or Terraform to set those up, I’ve got a proxmox template with docker and tailscale etc installed that I use, so the only bit of unique infrastructure is the docker compose file which is source controlled on Forgejo

- Everything’s on UPSs

- I have a bunch of ansible playbooks for routine maintenance such as apt updates, also in source control

- All the VPS workloads are dockerised with the same directory structure, and behind NGINX PM. I’ve gotten super comfortable with one VPS provider, so that’s a weakness. I should try moving them one day. They are mostly static websites, plus one important web app that I have a tested backup strategy for, but not an automated one, so that needs addressing.

- I use a local and an external UptimeKuma for monitoring, enhanced by running a tiny server on every instance that just exposes a disk free and memory free api that can be consumed by Uptime.
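The "tiny server" in the last bullet could look something like the sketch below. This is a guess at the idea, not the commenter's actual code: the JSON field names, the port, and the function names are all assumptions, and the memory figure is read from `/proc/meminfo`, so it only works on Linux.

```python
import json
import shutil
from http.server import BaseHTTPRequestHandler, HTTPServer

def memory_available_bytes(meminfo_path: str = "/proc/meminfo"):
    """Return MemAvailable in bytes on Linux, or None if it cannot be read."""
    try:
        with open(meminfo_path) as f:
            for line in f:
                if line.startswith("MemAvailable:"):
                    return int(line.split()[1]) * 1024  # value is in kB
    except OSError:
        pass
    return None

def health_payload(path: str = "/") -> dict:
    """Collect free disk space for path plus available memory."""
    disk = shutil.disk_usage(path)
    return {
        "disk_free_bytes": disk.free,
        "disk_free_percent": round(100 * disk.free / disk.total, 1),
        "memory_available_bytes": memory_available_bytes(),
    }

class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Every GET returns the same JSON document.
        body = json.dumps(health_payload()).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(port: int = 8080) -> None:
    """Serve the payload until interrupted; the port is an arbitrary choice."""
    HTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

Calling `serve()` on each instance gives the monitor one URL per machine to poll. Since it listens on all interfaces, it is best kept behind the VPN or a firewall.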

I still have lots of single points of failure - Tailscale, my internet provider, my domain provider etc, but I think I’ve addressed the most common which would be hardware failures at home. My monitoring is also probably sub-par, I’m not really looking at logs unless I’m investigating a problem. Maybe there’s a Netdata or something in my future.

You’ve mentioned that syncing to a remote server for backups is a step you don’t want to take. If you mean that managing your own remote server is the step you don’t want to take, then your solutions are a paid backup service like Backblaze or physically shuffling external USB drives (or extra NASs) back and forth to somewhere, depending on what downtime you can tolerate.

TrueNAS scale helps a lot, as it makes many popular apps just a few clicks away. Or for more power-users, stuff like the linux cockpit also really helps.

To directly answer your questions…

- In the event of DB corruption (which hasn’t happened to me yet) I would probably rollback that app to the previous snapshot. I suspect that TrueNAS having ZFS as an underlayment may help in this regard, as it actually detects bitrot and bitflips, which may be the underlying cause of such corruption.

- In the case where a device breaks… if it’s a hard drive that broke, I just pop in a new one and add it to the degraded mirror set. If it’s “something else” that broke, my plan is to pop one of the mirror shards into a spare PoS computer (as truenas scale runs on common x86 hardware) and deal with the ugly-factor until I repair or replace the bigger issue.

- The only way to defend against a cloud provider is replication, so plan accordingly if that is a concern.

- If by “sync’d confidentially” you mean encrypted in transit, I’m pretty sure that TrueNAS has built in replication over SSH. If you meant TNO, then you probably want to build your setup over a cryfs filesystem so no cleartext bits hit the cloud, although on second thought… it’s not really meant for multi-master synchronization… my case just happens to fit it (only one device writes)… so there is probably a better choice for this.

- Setup is a hassle? Yes… just be sure that you invest that hassle into something permanent, if not something like a TrueNAS configuration (where the config gets carried along for the ride with the data) then maybe something like ansible scripts (which is machine-readable documentation). Depending on your organization skills, even hand-written notes or making your own “meta” software packages (with only dependencies & install scripts) might work. What you don’t want to do is manually tweak a linux install, and then forget what is “special” about that server or what is relying on it.

- How safe is my setup? Depends… I still need to start rotating a mirror shard as an offsite backup, so not very robust against a site disaster; Security-wise… I’ve got a lot of private bits, and it works for my needs… as far as I know :)

- Still enthusiastic? I try to see everything as both temporary and a work-in-progress. This can be good in ways because nothing has to be perfect, but can be bad in ways that my setup at any given time is an ugly amalgamation of different experimental ideas that may or may not survive the next “iteration”. For example, I still have centos 7 & python 2 stuff that needs to be migrated or obsoleted.

As an alternative, Unraid. While it’s paid, it strips away a lot of the hassle you mentioned in your post. Has a built in shop where you just click, set up ports/shares and docker containers just spin up for you.

While I’m not a huge fan of their recent subscription model change, I do love their OS (I’m still grandfathered into the pre-existing perpetual license).

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
| --- | --- |
| DNS | Domain Name Service/System |
| Git | Popular version control system, primarily for code |
| HA | Home Assistant automation software ~ High Availability |
| HTTP | Hypertext Transfer Protocol, the Web |
| IP | Internet Protocol |
| LVM | (Linux) Logical Volume Manager for filesystem mapping |
| LXC | Linux Containers |
| NAS | Network-Attached Storage |
| PSU | Power Supply Unit |
| Plex | Brand of media server package |
| RAID | Redundant Array of Independent Disks for mass storage |
| RPi | Raspberry Pi brand of SBC |
| SBC | Single-Board Computer |
| SSH | Secure Shell for remote terminal access |
| VPS | Virtual Private Server (opposed to shared hosting) |
| ZFS | Solaris/Linux filesystem focusing on data integrity |
| nginx | Popular HTTP server |

15 acronyms in this thread; the most compressed thread commented on today has 3 acronyms.

[Thread #821 for this sub, first seen 21st Jun 2024, 17:05]

Not safe at all. I look for robustness. I prefer thinking about things that do not break easily (like ZFS and RAIDZ) instead of “what could possibly go wrong”

And I have never quite figured out how to do restores, so I neglect backups as well.